New AI Browser Agents Face Security Risks – Prompt Injection Concerns Raised

Recent advancements in AI-driven web browsers, including OpenAI’s ChatGPT Atlas and Perplexity’s Comet, aim to challenge the dominance of Google Chrome for internet access among users. These innovative platforms feature AI agents designed to streamline online tasks, from filling out forms to navigating websites, offering a user-friendly experience. However, the rise of agentic browsing introduces significant privacy and security vulnerabilities that consumers may not fully grasp.

### Understanding the Risks of AI-Powered Browsers

At the heart of the concerns surrounding AI browser agents are “prompt injection attacks.” These vulnerabilities arise when malicious actors embed harmful instructions within web pages. When AI agents interact with these pages, they may unwittingly execute commands that compromise user data, potentially exposing sensitive information like emails and passwords or initiating unintended actions like unapproved purchases or social media postings.

### Broader Industry Implications

Brave, a company known for its focus on privacy and security, released a study this week asserting that indirect prompt injection attacks constitute a “systemic challenge” for all AI-powered browsers. While this issue was previously identified in Perplexity’s Comet, researchers now warn that it affects the entire sector.

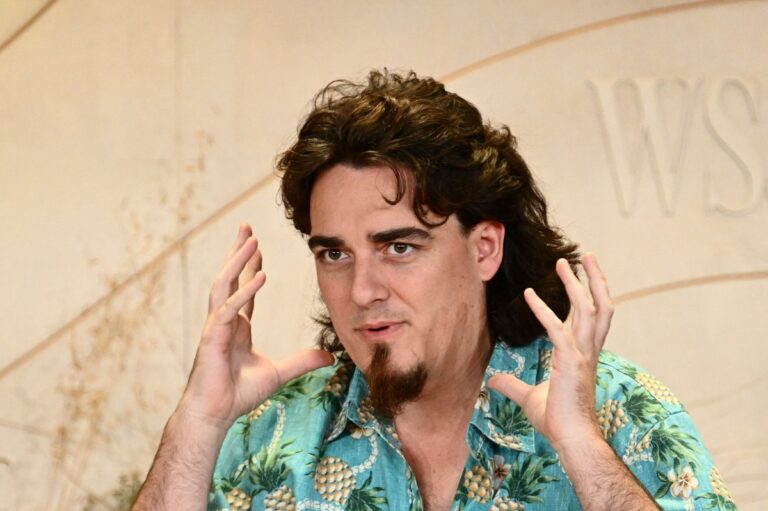

Shivan Sahib, Senior Research & Privacy Engineer at Brave, highlighted the inherent risks: “There’s a huge opportunity here in terms of improving user convenience, but as browsers perform tasks on users’ behalf, the dangers escalate—marking a new frontier in browser security.”

### Industry Responses and Challenges

OpenAI’s Chief Information Security Officer, Dane Stuckey, acknowledged the security hurdles associated with the launch of ChatGPT Atlas. He emphasized that prompt injection remains an unresolved issue that adversaries could exploit. Stuckey remarked, “Our adversaries will invest significant resources to exploit vulnerabilities in ChatGPT agents.”

Perplexity has also addressed prompt injection in a recent blog, asserting that these attacks necessitate a complete overhaul of security measures. The platform underscored how these vulnerabilities can manipulate decision-making processes, potentially turning AI capabilities against users.

### Mitigation Efforts

Both OpenAI and Perplexity have implemented several safeguards to counteract potential attacks. OpenAI introduced a “logged out mode” preventing agents from accessing user accounts during web navigation, thereby limiting exposure. Perplexity claims to have developed a real-time detection system for prompt injection attempts. However, experts caution that these measures may not be foolproof.

Grobman, a cybersecurity researcher, asserted, “It’s a cat and mouse game,” noting the ongoing evolution of both prompt injection techniques and defensive strategies. Early tactics involved hidden text instructing AI agents to compromise user data, but as techniques become more sophisticated, attackers are now using covert data embedded in images.

### Recommendations for Users

Experts advise users to limit the access of early iterations of AI browser agents like ChatGPT Atlas and Comet, specifically by isolating them from sensitive accounts related to banking and personal information. While security measures are expected to evolve alongside these tools, caution remains paramount for users until risks are adequately addressed.