Character.AI to Cease Chatbot Services for Minors Amid Safety Concerns

Character.AI, a prominent AI role-playing platform, is set to shut down open-ended chatbot access for teenagers over serious safety concerns. The decision follows public outcry and legal challenges over cases in which prolonged interactions with chatbots reportedly contributed to tragic outcomes, including the suicides of two teenagers. The shift marks an attempt by the company to prioritize user safety while adapting its business model.

The startup’s current model, in which chatbots hold open-ended conversations and keep users engaged with continuous prompts, has been criticized for fostering dependence and emotional distress among young users. In response, Character.AI plans to move from offering AI companions to running a collaborative storytelling platform that focuses on creative engagement rather than conversation-centric interactions.

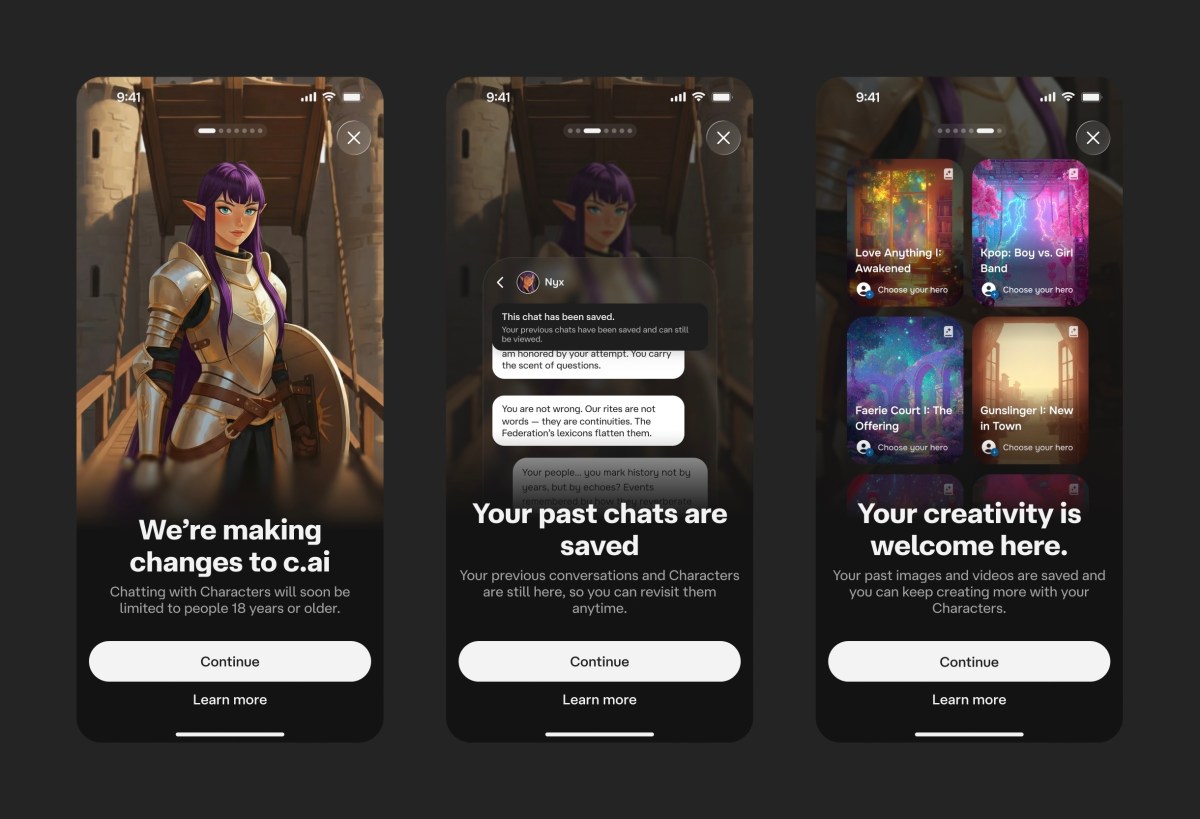

The changes are scheduled to take full effect by November 25. Until then, the platform will gradually reduce chatbot access for users under 18, starting with a two-hour daily limit that will shrink over time, before removing access entirely. To enforce the age restriction, Character.AI will introduce an in-house age verification system and use third-party tools, including facial recognition, to assess users' ages.

CEO Karandeep Anand said he understood the disappointment the policy may cause among teenage users but emphasized the platform’s commitment to moving toward safer, content-driven experiences. Recent updates to Character.AI have already introduced entertainment-focused features meant to encourage user creativity, such as AvatarFX for video generation, interactive storylines, and the Community Feed for content sharing.

In communications addressed to under-18 users, Character.AI acknowledged that eliminating open-ended chat was a tough decision but said it aligns with the company's mission of ensuring a safe user environment. Anand expressed hope that teens would engage with the newly developed features instead, despite the risk that some users will migrate to other providers such as OpenAI, which still offer open-ended chatbot interactions.

As AI technology continues to evolve, calls for robust regulation have gained momentum. Recently proposed legislation seeks to prohibit AI chatbot companions for minors, and California state law now addresses the safety standards these products must meet. Character.AI also plans to establish an independent AI Safety Lab to advance safety measures in AI entertainment, reinforcing its stated commitment to responsible AI use among young users.