The Emergence of Moltbot: A Groundbreaking AI Assistant

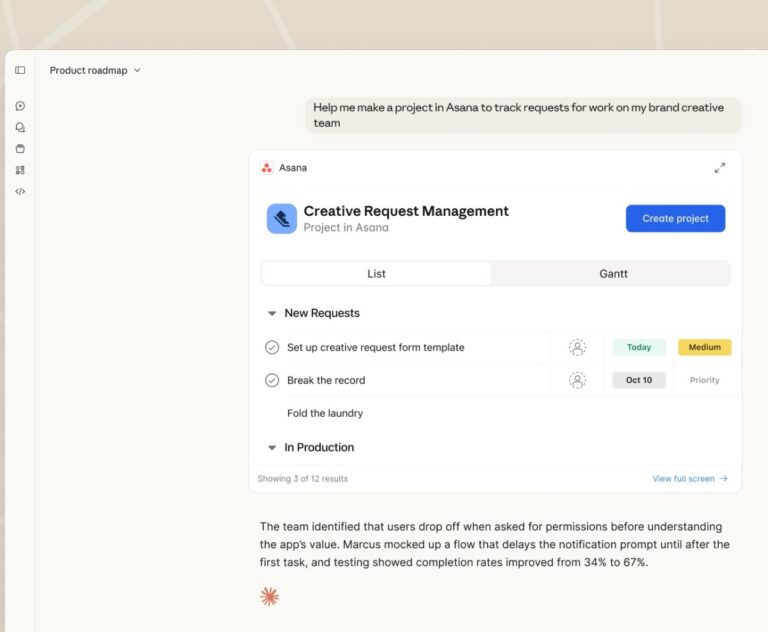

In a remarkable turn of events in the AI landscape, Moltbot, previously known as Clawdbot, has quickly gained widespread popularity since its launch. Despite a name change prompted by a legal challenge from Anthropic, this unique AI assistant, designed with a lobster theme, promises to revolutionize personal digital assistance. With its tagline proclaiming it as “the AI that actually does things,” Moltbot caters to users seeking advanced functionality, including calendar management and messaging through various apps.

Initially conceived as a personal project by Austrian developer Peter Steinberger, known online as @steipete, the tool evolved from his previous venture, PSPDFkit. Following a three-year hiatus from development, Steinberger refocused his efforts on creating Moltbot to simplify his digital life. The nascent AI has quickly attracted a robust user base, boasting over 44,200 stars on GitHub, reflecting strong early adoption among tech enthusiasts.

Significantly, the momentum surrounding Moltbot has had a noticeable impact on cloud service stocks—Cloudflare’s share price surged by 14% amid growing social media buzz, underscoring the commercial potential of such AI advancements. Users drawn to the idea of a personal AI assistant performing tasks for them are eager to explore the capabilities of Moltbot, although the installation process necessitates a certain level of technical expertise.

While Moltbot aims to enhance user efficiency, it also raises important security considerations. As an open-source tool operating locally on users’ machines, it allows developers to inspect its code for vulnerabilities. However, as highlighted by entrepreneur Rahul Sood, the ability of Moltbot to execute commands presents potential risks, particularly concerning “prompt injection through content.” This vulnerability could lead malicious entities to manipulate the AI assistant into executing unintended actions.

To mitigate such risks, users are encouraged to adopt meticulous setup protocols and potentially utilize virtual private servers for operation, thus isolating sensitive data. While more experienced developers may be adept at navigating these challenges, newcomers should exercise caution, particularly regarding data security.

Steinberger himself faced security challenges, including impersonation by crypto scammers following his project’s name change, illustrating the inherent dangers surrounding rapidly popularized technologies. He has advised followers to remain vigilant against scams while confirming the legitimacy of the current Moltbot account.

As Moltbot progresses, the balance between functionality and security remains a pressing concern. Although it offers exciting prospects, prospective users must weigh the technical requirements and risks involved. Still, Moltbot’s development underscores the ongoing evolution of AI assistants, signifying a shift towards truly capable digital aides that may reshape personal productivity in the coming years.