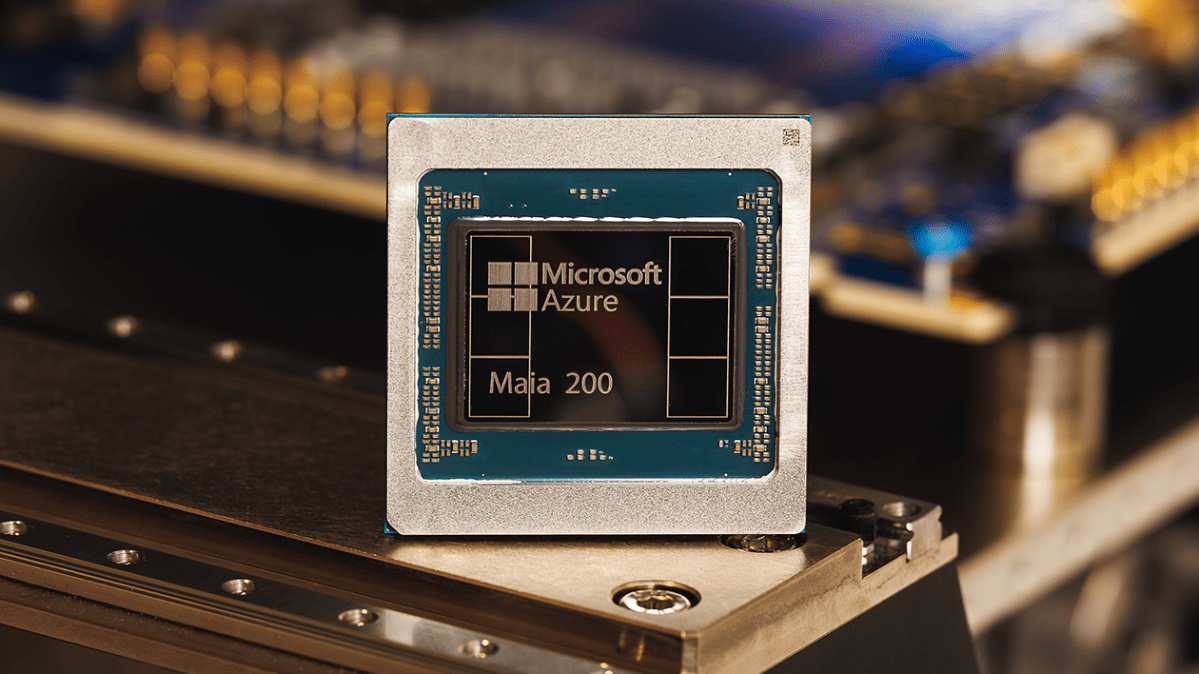

Microsoft has introduced the Maia 200, a chip designed specifically for AI inference and the successor to the Maia 100, released in 2023. The Maia 200 contains more than 100 billion transistors. It delivers more than 10 petaflops at 4-bit precision and roughly 5 petaflops at 8-bit precision, a substantial performance increase over its predecessor.

AI inference is the process of running a trained model to produce outputs, as opposed to training the model in the first place. As companies scale up their AI products, inference costs have become a growing share of their operating expenses. Microsoft says the Maia 200 is meant to help AI businesses run with fewer disruptions and lower power consumption. According to the company, "one Maia 200 node can effortlessly run today’s largest models, with ample capacity for future advancements."

The move fits a broader trend of large technology companies designing their own chips to reduce their reliance on Nvidia’s GPUs, which have become the default hardware for AI workloads. Google, with its TPUs, and Amazon, with its Trainium series, have pursued the same strategy. Microsoft claims the Maia 200 delivers three times the FP4 performance of Amazon’s third-generation Trainium chip and higher FP8 performance than Google’s seventh-generation TPU.

The Maia 200 is already in use inside Microsoft, where it runs models for the company’s Superintelligence team and supports the Copilot chatbot. Microsoft has also invited developers, academics, and frontier AI research labs to use the Maia 200 software development kit in their own projects.

In summary:

- Chip Name: Maia 200

- Transistor Count: Over 100 billion

- Performance: 10+ petaflops (4-bit), ~5 petaflops (8-bit)

- Optimization Focus: Reducing inference costs and power usage

- Competitive Claims: 3x the FP4 performance of Amazon’s third-generation Trainium and higher FP8 performance than Google’s seventh-generation TPU, according to Microsoft

- Current Utilization: Runs models for Microsoft’s Superintelligence team and supports Copilot; the SDK is open to developers, academics, and frontier AI research labs.