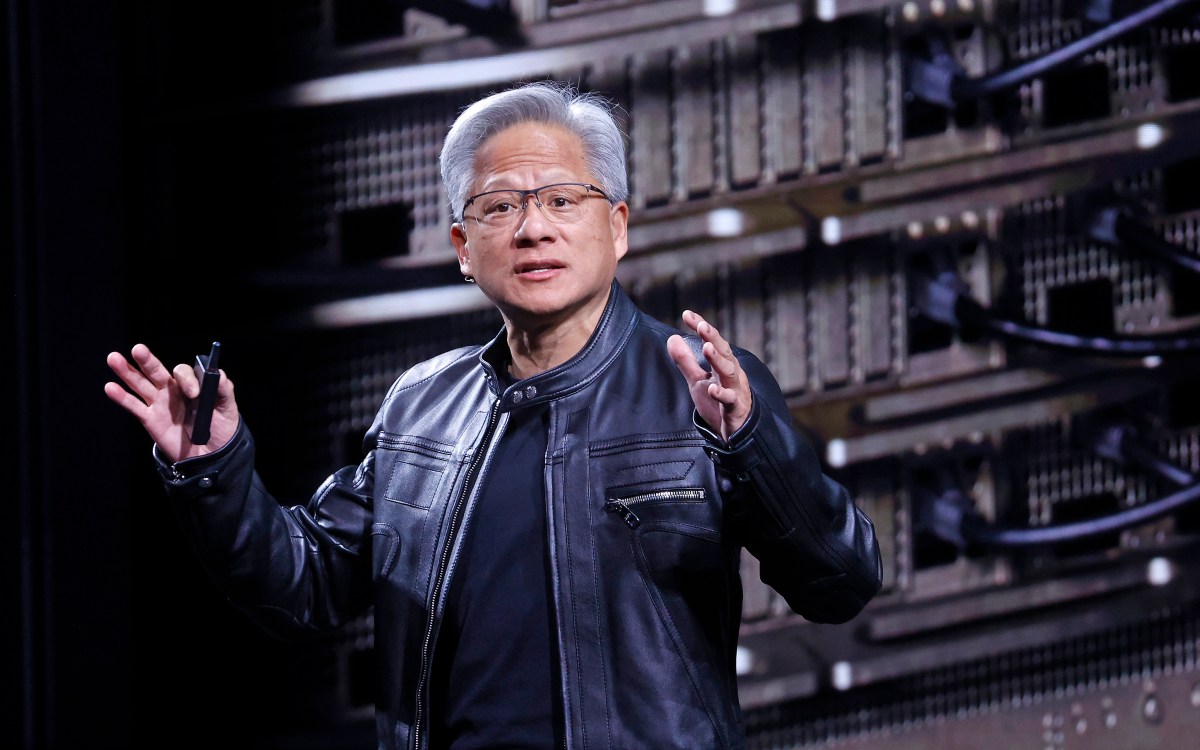

Nvidia Unveils Cutting-Edge Rubin Chip Architecture at CES

Nvidia has officially launched its Rubin computing architecture, which the company is positioning as a leading solution in AI hardware. CEO Jensen Huang announced it at the Consumer Electronics Show (CES) and said the architecture is already in production, with availability expected to increase in the second half of the year. “The Vera Rubin architecture is designed to meet the escalating computational demands of artificial intelligence,” Huang stated. “I’m pleased to confirm that production is fully underway.”

First announced in 2024, the Rubin architecture is the latest step in Nvidia’s continuous hardware development cycle. It will replace the Blackwell architecture, which in turn succeeded the Hopper and Lovelace architectures.

Rubin chips are set for widespread adoption: nearly every major cloud provider has secured a partnership with Nvidia, and named partners include Anthropic, OpenAI, and Amazon Web Services. Rubin systems will also be integrated into large computing installations such as HPE’s Blue Lion supercomputer and the forthcoming Doudna supercomputer at Lawrence Berkeley National Lab.

Named after renowned astronomer Vera Florence Cooper Rubin, the architecture comprises six specialized chips that work in tandem, centered around the Rubin GPU. It also addresses critical bottlenecks in storage and interconnection through advancements in the BlueField and NVLink systems. In addition, a new Vera CPU has been developed specifically for agentic reasoning tasks.

Dion Harris, Nvidia’s senior director of AI infrastructure solutions, highlighted one benefit of the new architecture: it eases the growing memory demands of caching. “New workflows, such as agentic AI or long-term tasks, generate increased pressure on the key-value cache system,” Harris explained. “Our enhanced storage tier connects externally to computing devices, allowing for more efficient scaling of storage pools.”
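To see why long-running or agentic workloads put pressure on the key-value cache, a rough size estimate helps. The sketch below uses the standard back-of-the-envelope formula for a transformer decoder's KV cache (keys plus values, per layer, per token). The model shape (80 layers, 8 key-value heads, head dimension 128, 16-bit values) is a hypothetical example chosen for illustration, not a figure from Nvidia or any specific model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Approximate KV-cache size in bytes for a transformer decoder."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


GIB = 1024 ** 3

# Hypothetical model shape, for illustration only.
short_ctx = kv_cache_bytes(80, 8, 128, seq_len=8_192)
long_ctx = kv_cache_bytes(80, 8, 128, seq_len=131_072)

print(short_ctx / GIB)  # 2.5
print(long_ctx / GIB)   # 40.0
```

Under these assumptions the cache grows linearly with context length, from about 2.5 GiB at 8K tokens to 40 GiB at 128K tokens for a single sequence, which suggests why a storage tier that scales separately from the compute devices is attractive.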

Rubin also shows large gains in speed and energy efficiency. According to Nvidia’s internal tests, it runs model-training tasks 3.5 times faster than its predecessor, Blackwell, and inference tasks five times faster, reaching up to 50 petaflops. The platform also delivers eight times more inference compute per watt.
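As a rough illustration of what these multipliers mean in practice, the sketch below applies them to a hypothetical workload. The 35-day baseline training run is invented for the example, and the implied Blackwell inference figure is a simple division that assumes the five-fold gain and the 50-petaflop peak refer to the same metric, which the article does not confirm.

```python
# Figures quoted in the article (Nvidia's internal tests).
TRAINING_SPEEDUP = 3.5
INFERENCE_SPEEDUP = 5.0
RUBIN_INFERENCE_PFLOPS = 50.0
INFERENCE_PER_WATT_GAIN = 8.0


def scaled_time(baseline_time, speedup):
    """Time for the same job after applying a speedup factor."""
    return baseline_time / speedup


# Hypothetical baseline: a training run that takes 35 days on Blackwell.
baseline_days = 35.0
rubin_days = scaled_time(baseline_days, TRAINING_SPEEDUP)

# Baseline implied by the quoted numbers (assumption: same metric for both).
implied_blackwell_pflops = RUBIN_INFERENCE_PFLOPS / INFERENCE_SPEEDUP

# Fraction of energy needed for the same amount of inference work.
energy_fraction = 1.0 / INFERENCE_PER_WATT_GAIN

print(rubin_days)                # 10.0
print(implied_blackwell_pflops)  # 10.0
print(energy_fraction)           # 0.125
```

Read this way, the eight-fold efficiency gain means the same inference workload would need about one eighth of the energy, provided real-world workloads match the conditions of Nvidia's tests.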

These advances arrive as tech firms and cloud providers compete to build out AI infrastructure. On a recent earnings call, Huang projected that $3 trillion to $4 trillion will be invested in AI resources over the next five years.