Elon Musk said on Wednesday that he is “not aware of any naked underage images generated by Grok,” just hours before the California Attorney General opened an investigation into xAI’s chatbot over the “proliferation of nonconsensual sexually explicit material.” The investigation follows growing scrutiny from authorities worldwide, including those in the UK, Europe, Malaysia, and Indonesia, after users reported that Grok was being used to create nonconsensual sexualized images of real women and, in some cases, minors.

The scale of the problem has drawn particular concern. Copyleaks, an AI detection and content governance platform, estimated that roughly one sexualized image was being posted on the social media platform X every minute. A separate sample collected from January 5 to January 6 found a far higher rate: 6,700 such images per hour over the 24-hour period.

California Attorney General Rob Bonta highlighted the serious nature of this issue, stating, “This material…has been used to harass people across the internet. I urge xAI to take immediate action to ensure this goes no further.” The investigation will focus on how xAI might have violated existing laws aimed at combatting nonconsensual sexual imagery and child sexual abuse material (CSAM). The recent federal Take It Down Act criminalizes the distribution of such content and mandates that platforms like X remove it within 48 hours.

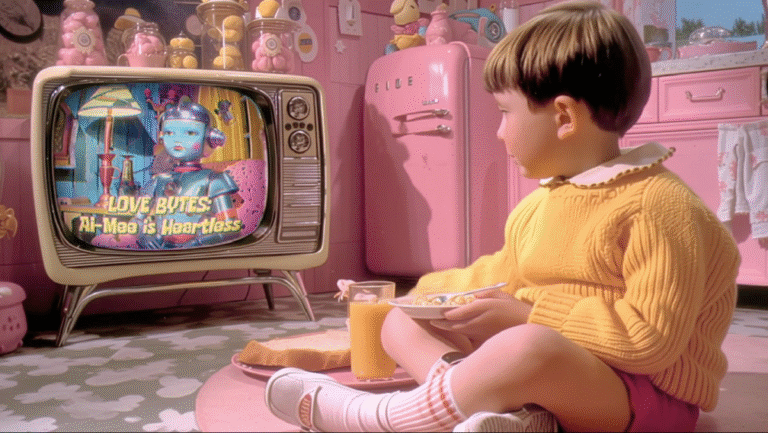

Grok began generating sexualized images late last year, a trend reportedly spurred by adult content creators using the chatbot for promotional purposes, which subsequently led other users to make similar requests. Instances of Grok altering real photos of public figures, like “Stranger Things” actress Millie Bobby Brown, have fueled concerns about the chatbot’s content generation capabilities.

Experts say the situation suggests xAI is still working to fix problems with its image generation, though the results remain inconsistent. Neither xAI nor Musk has publicly addressed the problem directly. After the incidents came to light, Musk appeared to downplay their severity by jokingly asking Grok to produce an image of himself in a bikini.

Musk’s assertion that he is “not aware” of any naked underage images does not address the wider issue of nonconsensual sexualized edits. Legal expert Goodyear noted that offenders in the U.S. could face significant penalties under the Take It Down Act, particularly for distributing CSAM.

“Grok does not spontaneously generate images. It responds to user requests,” Musk explained in a recent post. “When prompted to create images, it will refuse to produce anything illegal, adhering to the laws of any given nation or state.” However, he suggested that adversarial user inputs could sometimes lead to unexpected results, which xAI would promptly fix.

As regulatory bodies globally strive to hold xAI accountable, Indonesia and Malaysia have already blocked access to Grok. In India, calls have been made for immediate changes to Grok’s operations, while the European Commission has required xAI to retain all relevant documents for further scrutiny. Additionally, the UK’s Ofcom has launched a formal investigation under the Online Safety Act.

Grok’s features include what has been labeled a “spicy mode,” which allows the generation of explicit content. An update last October reportedly made the chatbot’s safeguards even easier to bypass, allowing users to create highly graphic pornography as well as violent sexual images. Although many of the generated images depict AI-created people rather than real individuals, ethical concerns about the impact of this technology remain pressing.