Meta has announced an update for its AI glasses that makes it easier to hear conversations in noisy settings, a feature first previewed at the company's recent Connect conference. The update is for the Ray-Ban Meta and Oakley Meta HSTN smart glasses and will roll out first in the U.S. and Canada. It amplifies the voice of the person you are talking with, so wearers can focus on a conversation in busy places such as restaurants, bars, or public transit. Wearers can match the level of amplification to their surroundings by swiping along the right temple of the glasses or by adjusting it in the device settings.

Alongside conversation enhancement, the update adds a Spotify integration that plays songs matching what the wearer is looking at. Looking at an album cover, for example, could start music by that artist, while looking at a Christmas tree might cue holiday songs. The feature is more whimsical, but it shows how Meta is trying to tie what wearers see to the audio they hear.

Meta is not alone in this space: Apple's AirPods offer a Conversation Boost option, and the Pro models add a clinical-grade Hearing Aid mode, both aimed at making conversations easier to follow.

The update, software version 21, will first be available to members of Meta's Early Access Program, which requires joining a waitlist and being approved. A broader rollout will follow, which could bring the features to more users in more regions.

Key Points:

– New AI glasses feature amplifies conversations in noisy settings.

– Available on Ray-Ban Meta and Oakley Meta HSTN in the U.S. and Canada.

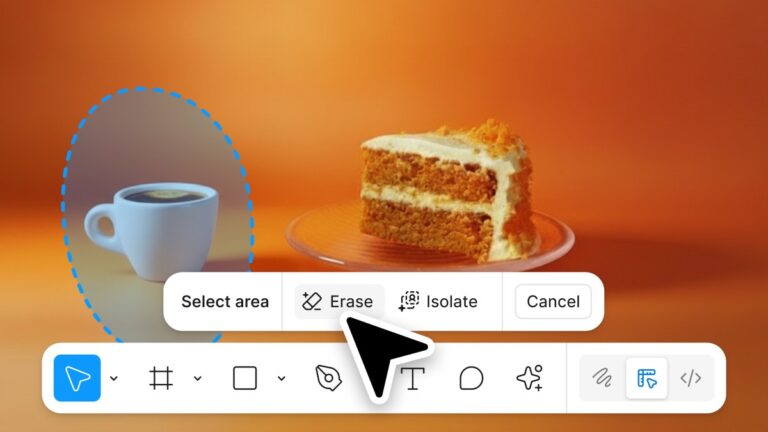

– Users can adjust the amplification by swiping the right temple or through the device settings.

– Spotify integration allows song playback based on visual context.

– Initial access via Meta’s Early Access Program, with wider availability planned.