Dr. Sina Bari, a practicing surgeon and an AI healthcare expert at iMerit, has seen firsthand the risks of AI chatbots like ChatGPT when they give flawed medical advice. In one instance, he came across misleading information about a medication intended for a subgroup of tuberculosis patients, information that did not apply to the case he was handling. Such experiences raise valid concerns, yet Bari remains optimistic about the recently announced ChatGPT Health, which is set to launch in the coming weeks.

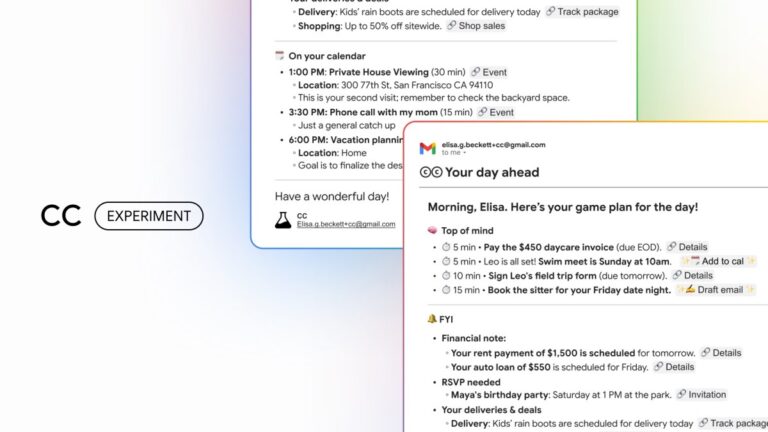

ChatGPT Health will offer users a more private environment, with OpenAI promising that their conversations will not be used as training data for its AI models. “It’s great,” said Dr. Bari, welcoming a change that makes patient use safer while also making the chatbot more useful. The new feature lets users upload their medical records and connect health apps such as Apple Health and MyFitnessPal for more tailored guidance.

Despite these security concerns, many industry experts believe the shift toward AI-assisted healthcare is already underway: more than 230 million people ask ChatGPT about health issues every week. AI hallucinations, however, remain a significant concern in healthcare. Recent evaluations indicate that OpenAI’s GPT-5 is more prone to hallucination than certain models from Google and Anthropic, underscoring how much accuracy matters for medical AI.

Dr. Nigam Shah, a professor of medicine at Stanford University and chief data scientist at Stanford Health Care, argues that the more pressing issue is not the risk of AI inaccuracies but the alarming delays patients face in getting care. With wait times for primary care appointments often running from three to six months, Shah suggests that using AI for preliminary assessments could ease the burden on patients.

He advocates integrating AI into healthcare systems on the provider side. Medical literature suggests that administrative tasks consume nearly half of a primary care physician’s time, limiting how many patients they can see. Automating these tasks could let physicians see significantly more patients and reduce the need for patients to turn to chatbots with medical questions.

Shah’s team is developing ChatEHR, a tool designed to make electronic health records easier to navigate, freeing doctors to spend more time with patients. As Dr. Sneha Jain, an early tester of ChatEHR, notes, “Improving electronic medical records makes it easier for physicians to focus on critical patient interactions.”

Meanwhile, Anthropic has introduced Claude for Healthcare, which aims to save clinicians and insurers time by automating tedious tasks such as prior authorization requests. “Imagine cutting twenty, thirty minutes out of each case; it’s a dramatic time saver,” said Anthropic chief product officer Mike Krieger at a recent healthcare conference.

The meeting of AI technology and medicine carries inherent tension. Healthcare professionals put patient welfare first, while tech companies answer to shareholders, and those priorities can conflict. “This tension is crucial,” Dr. Bari said, stressing the need for a cautious approach that protects patient safety.

This evolving landscape highlights the double-edged nature of AI in healthcare: it can make care more efficient, but it demands careful management to protect patient safety and the quality of care.