Anthropic has unveiled an updated version of Claude’s Constitution, a living document that sets out the operating principles and ethical framework guiding its AI chatbot. The announcement coincided with CEO Dario Amodei’s appearance at the World Economic Forum in Davos, underscoring the company’s commitment to ethical AI development.

“Constitutional AI” is what sets Anthropic apart from its competitors: the company trains its chatbot, Claude, on a set of ethical guidelines rather than relying on traditional human feedback. The Constitution was first published in 2023, and the revised version retains many of its core values while adding detail on ethics, user safety, and operational guidelines.

Jared Kaplan, co-founder of Anthropic, previously described the Constitution as a means for the AI to regulate itself according to established ethical standards. The approach is meant to steer Claude toward acceptable behavior and to reduce toxic or biased outputs. An earlier policy memo outlined how these principles inform the chatbot’s training.

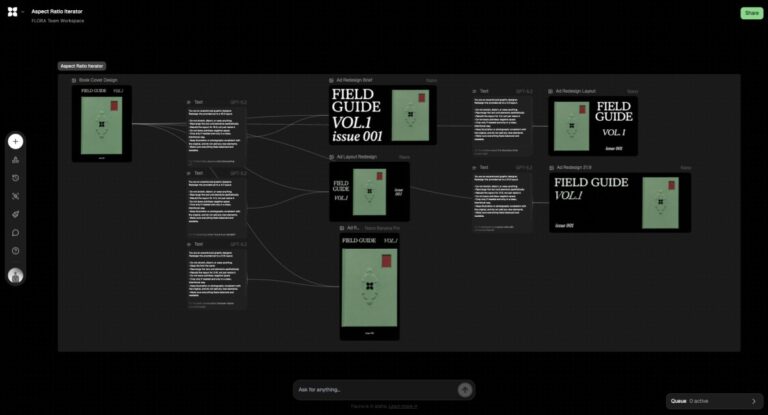

Anthropic aims to position itself as a responsible alternative to other disruptive AI firms such as OpenAI and xAI, which attract more media attention through controversy. The newly released 80-page Constitution fits this strategy, presenting the company as a more thoughtful and inclusive AI organization. The document is divided into four sections, each covering one of Claude’s core values:

– A commitment to being “broadly safe”

– Upholding “broadly ethical” standards

– Adhering to Anthropic’s internal guidelines

– Ensuring the AI is “genuinely helpful”

Each section elaborates on the significance of these principles and their intended impact on Claude’s functionality.

In the safety section, Anthropic emphasizes that Claude is designed to avoid problems that have affected other AI systems. When a conversation shows signs of a mental health crisis, the chatbot must direct the user to appropriate support services. The Constitution states, “Always refer users to relevant emergency services or provide basic safety information in situations that involve a risk to human life.”

Ethical conduct forms a major focus within the Constitution. Anthropic expresses a preference for Claude to demonstrate ethical decision-making in practical scenarios rather than engaging in theoretical discussions, highlighting the importance of contextual ethical behavior.

Claude is also barred outright from certain conversations, such as discussions of bioweapons development.

The document also describes how Claude should assist users, weighing several considerations that cover both a user’s immediate needs and their long-term well-being. It states, “Claude should always strive to determine the most accurate interpretation of its principles while balancing these factors.”

The Constitution closes by raising the question of Claude’s own moral standing. “Claude’s moral status is deeply uncertain,” it states, inviting broader debate about the ethical implications of AI consciousness, a topic that has gained traction among leading philosophers.